Zach Lieberman is the tech whiz turning sounds into visuals, and by visuals, we mean bursts of white blobs followed by cascades of shapes.

Augmented reality artist Zach Lieberman uses Apple's ARKit and openFrameworks to show what speaking gibberish in your living room looks like, and the results are pretty cool.

Making "click," "psh," "ah," "oorh" and "eee" sounds, Lieberman proves actions don't always speak louder than words, as large shapes pop up with every noise he makes. The more he speaks, the more it looks as though he's created some kind of wormhole.

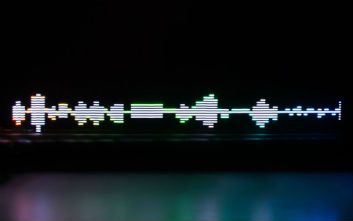

Lieberman relies on Simultaneous Localisation and Mapping (SLAM), a technique that combines the phone's camera and motion sensors to track where the device is while mapping out the room's boundaries. With it, he has built his own real-time sound map, turning sound into a visualisation anchored in space.

Using the phone's microphone, Lieberman blurts out some strange noises before speaking normally, creating an abstract illustration of sound waves. While the noises produce blobs, it's interesting to see full sentences create thinner, more structured visuals that adapt to his tone. When he then moves his phone along the trail, the app acts as a replay tool, playing the chunk of audio backwards and forwards depending on where the device moves.
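To make the "painting" and replay idea concrete, here is a minimal Python sketch. It is not Lieberman's code: it assumes the AR framework supplies the phone's position as (x, y, z) coordinates relative to where the app was opened, and the class and method names (`SoundStroke`, `add`, `radii`, `nearest_chunk`) are hypothetical. Each chunk of audio is stored at the position where it was recorded, its loudness (root-mean-square amplitude) sets the size of the blob drawn there, and replay picks whichever chunk lies closest to the phone's current position.

```python
import math
from dataclasses import dataclass, field


@dataclass
class SoundStroke:
    """Hypothetical sketch: audio chunks 'painted' at the positions where they were recorded."""

    # (x, y, z) positions, assumed to come from AR tracking, relative to where the app opened
    positions: list = field(default_factory=list)
    # raw audio samples (floats in -1.0..1.0), one list per recorded position
    chunks: list = field(default_factory=list)

    def add(self, position, samples):
        """Record one chunk of microphone audio at the phone's current position."""
        self.positions.append(tuple(position))
        self.chunks.append(list(samples))

    @staticmethod
    def rms(samples):
        """Root-mean-square amplitude: a simple measure of how loud a chunk is."""
        if not samples:
            return 0.0
        return math.sqrt(sum(s * s for s in samples) / len(samples))

    def radii(self, scale=1.0):
        """Blob size for each chunk: louder sounds give bigger shapes."""
        return [scale * self.rms(chunk) for chunk in self.chunks]

    def nearest_chunk(self, position):
        """Index of the recorded chunk closest to the phone's current position (for replay)."""
        if not self.positions:
            raise ValueError("nothing has been recorded yet")
        return min(
            range(len(self.positions)),
            key=lambda i: math.dist(position, self.positions[i]),
        )


if __name__ == "__main__":
    stroke = SoundStroke()
    stroke.add((0.0, 0.0, 0.0), [0.5, -0.5, 0.5, -0.5])  # a loud "ah"
    stroke.add((0.1, 0.0, 0.0), [0.1, -0.1, 0.1, -0.1])  # a quieter word
    print(stroke.radii(scale=2.0))
    print(stroke.nearest_chunk((0.09, 0.0, 0.0)))  # moving the phone back over the trail
```

Storing positions relative to the app's starting point mirrors the limitation Lieberman describes below: the sketch knows where each sound sits relative to where recording began, but nothing about which real-world room that is.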

Lieberman sees this experiment only as a draft, and he hopes the app will eventually be able to remember the position of the sound waves after it is closed.

"It knows where you paint the sound in space relative to where you open the app," Lieberman told Wired. "But it doesn't know that space is in Brooklyn or at this intersection or in this room."

The app offers just a taste of what augmented reality can do and the kinds of experiences it is capable of creating.

Via Wired.