Essential to the evolution of video games is digital technology. The cutting-edge field of digital tech crystallised in the late 1950s with the invention of the MOSFET (metal-oxide-semiconductor field-effect transistor, for those of you playing at home).

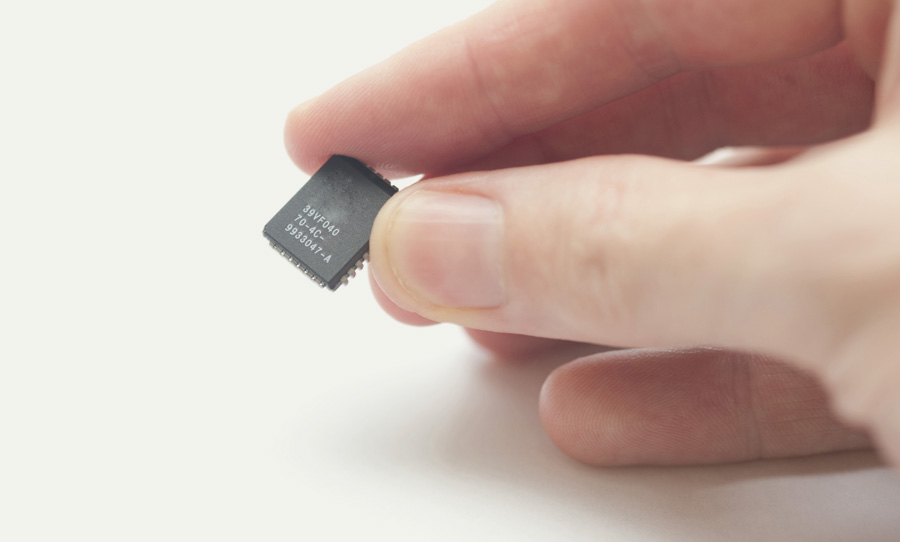

Used to control electronic signals via a semiconductor, typically silicon, MOSFETs are central to microchips and memory chips. The humble MOSFET, created by Mohamed Atalla and Dawon Kahng, has rolled off the factory floor more than 13 sextillion times. It’s no exaggeration to say that this tiny device has altered the course of humanity.

The miniaturisation of electrical switches has enabled massive computing brains to be crammed onto a small silicon chip. Throughout the video game era, transistors have kept shrinking and the power of the microchip has increased exponentially as a result, creating the gaming experiences that we take for granted today.

So let’s marvel at the influence of digital technology on gaming throughout history and imagine where it could take us in the future.

Experimental era

In the middle of the 20th century, a technological upheaval was underway, driven by the U.S. and the U.S.S.R., the two global superpowers that emerged from the Second World War. Most of the headlines were seized by the threat of nuclear war and the space race. But there was also a large investment in smaller tech that would go on to have far-reaching implications.

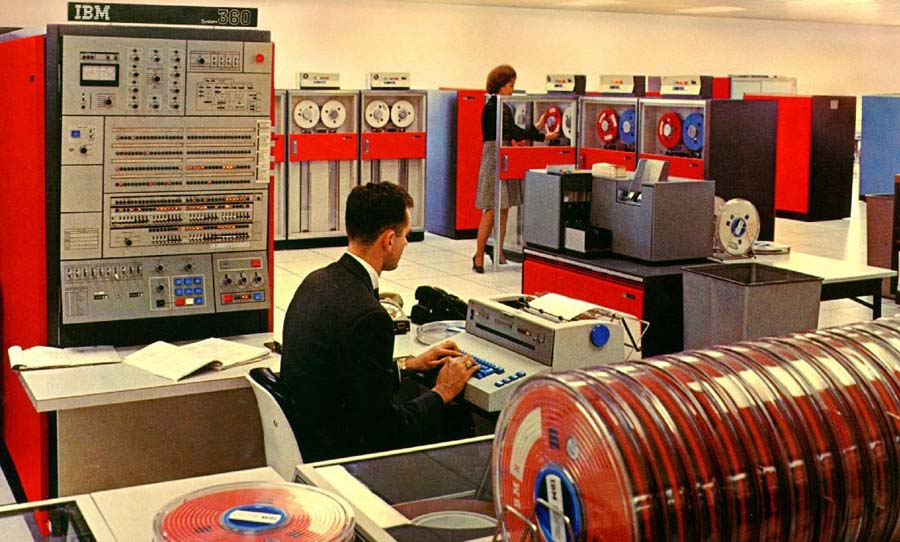

In the early ’60s, Steve Russell of the Massachusetts Institute of Technology developed one of the first games playable on a computer. Spacewar! (very much in the zeitgeist) pitted two spaceships against each other in a torpedo battle to the death.

This was early days in the field of digital technology and any Simpsons fan could tell you that the computers of this age weren’t commercially viable. It would be another decade or so before microchip technology improved enough for the seminal Pong to make its debut.

Gaming comes home

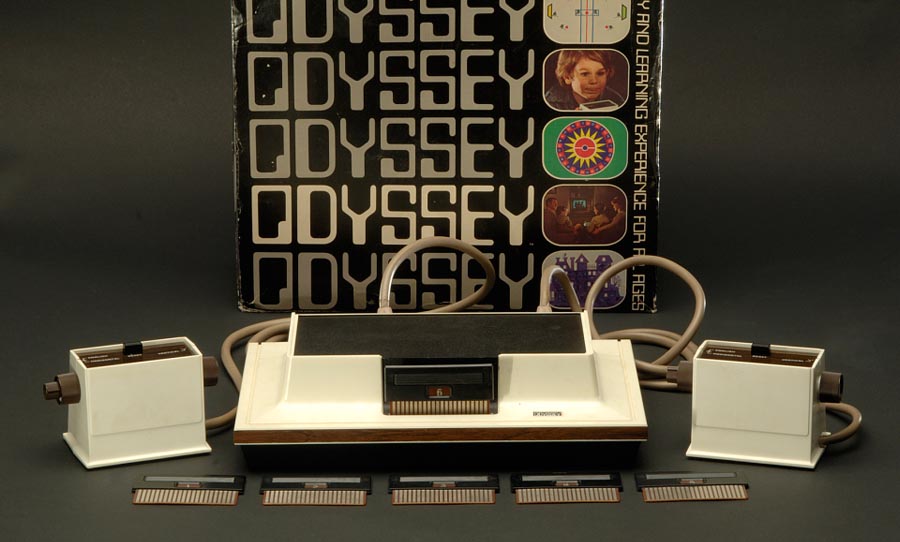

The Magnavox Odyssey hit the shelves in 1972 and made history as the first gaming console to be made for the domestic market. It relied on removable circuit cards rather than the cartridges that became popular soon after.

In 1975, the Atari Pong console arrived. Even in this short amount of time, significant technical improvements were made, courtesy of the microchip. The first cartridge-based console was the Fairchild Channel F, coming along in 1976. Plus, it had colour graphics!

By the end of the ’70s, Nintendo had entered the gaming arena, Apple had developed its first desktop computer and Atari had released the game-changing 2600 console: all signs of the increasing power, investment and commercial viability of digital technology and domestic gaming in general.

Exponential growth

By the ’80s, computers powered by microprocessors were the norm. From then right up until the present day, it’s easy to trace the capability of a gaming system by following one particularly crucial specification: Random Access Memory (RAM).

The RAM spec is integral in gaming because it acts as the console or computer’s short-term memory. Handling the dynamic, fluctuating needs of game action is the job of the RAM chip, so, all else being equal, the more of it there is, the smoother the gaming experience.

The Commodore 64 was one of the most popular gaming devices of the ’80s, housing 64 kilobytes of RAM. By comparison, 8 GB of RAM is a pretty typical (even quite modest) amount for computers today, and that works out to roughly 8,000,000 kilobytes.
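To make that comparison concrete, here is a minimal sketch of the arithmetic. It assumes decimal units (1 GB = 1,000,000 KB) to match the rounded figure above; the variable names are just for illustration.

```python
# Compare the Commodore 64's memory with a typical modern machine.
# Assumption: decimal units (1 GB = 1,000,000 KB), matching the rounded figure in the text.
KB_PER_GB = 1_000_000

c64_ram_kb = 64      # Commodore 64
modern_ram_gb = 8    # typical modern computer

modern_ram_kb = modern_ram_gb * KB_PER_GB
ratio = modern_ram_kb / c64_ram_kb

print(f"Modern RAM: {modern_ram_kb:,} KB")
print(f"That is {ratio:,.0f} times the Commodore 64's memory")
```

If you count in binary units instead (1 GB = 1,048,576 KB), the ratio comes out at 131,072 rather than 125,000. Either way, it is a gap of five orders of magnitude.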

In the decades between the Commodore 64 and today, there has been an exponential increase in computer and console power, resulting in a more immersive experience. The smaller memory in systems of the ’80s (think Sega Master System and Nintendo Entertainment System) meant graphics and audio quality had hard limits. Alongside microprocessor development, subsequent generations of consoles raised the bar for speed, sound and visual engagement.

Eye to the future

Microchips that previously housed dozens of transistors for controlling electrical signals now contain billions. The speed at which CPUs and RAM chips are developed and released gives PC gamers an advantage in the tech stakes compared with their counterparts in console-land: it’s years between drinks for consoles, whereas on a PC you can upgrade and customise your machine far more often.
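To get a feel for what going from dozens of transistors to billions means in exponential terms, here is a rough sketch that counts how many times the transistor count would need to double. The two counts are illustrative assumptions, not figures for any particular chip.

```python
import math

# Illustrative figures only: "dozens" and "billions" are not exact counts.
early_transistors = 50                # assumed early chip
modern_transistors = 5_000_000_000    # assumed modern chip

growth_factor = modern_transistors / early_transistors
doublings = math.log2(growth_factor)

print(f"Growth factor: {growth_factor:,.0f}x")
print(f"Doublings needed: {doublings:.1f}")
```

With these assumed numbers, fewer than 30 doublings separate the two chips, which is why steady exponential growth adds up so dramatically over a few decades.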

It’s actually really fun: just head on over to MWave, Aftershock or the like, build your own custom PC and marvel at the specs available to you. Though you can go crazy building your own epic gaming PC, the PS5 and Xbox Series X, both of which are due to arrive later in the year, lay down some pretty amazing stats of their own. For instance, the PS5 is slated to ship with an eight-core AMD Zen 2 CPU and 16 GB of RAM, putting it on par with workstations typically used for video editing or audio work.

So what does the future hold? Digital tech development has shown no signs of slowing down. As we incorporate microprocessors into more and more of our lives, that momentum will surely translate into more power for gaming systems, well into the future.